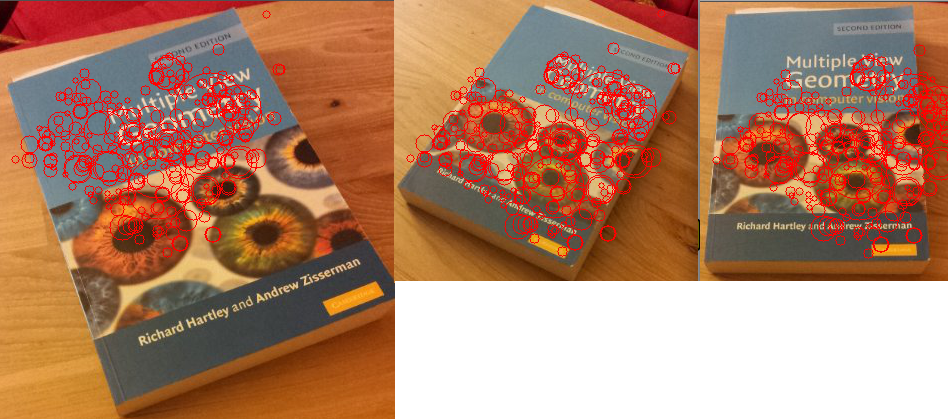

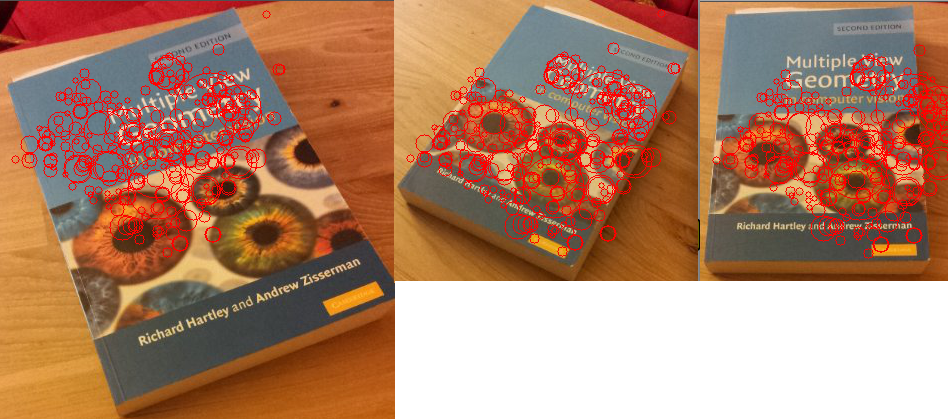

This is a test of SURF (Speeded Up Robust Features, http://en.wikipedia.org/wiki/SURF), a feature detector and descriptor claimed to be faster than the original SIFT feature descriptors, using OpenCV's Python bindings.
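Much of SURF's speed comes from approximating the Gaussian second derivatives in the Hessian with box filters evaluated on an integral image (summed-area table), so any box sum costs four lookups whatever the box size. The sketch below illustrates that idea in pure Python. It is not OpenCV's implementation, and the `integral_image` and `box_sum` names are hypothetical.

```python
def integral_image(img):
    """Return the summed-area table ii, where ii[r][c] = sum of img[0..r-1][0..c-1].

    ii has one extra leading row and column of zeros so box sums need no edge cases.
    """
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0  # running sum of img[r][0..c]
        for c in range(cols):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def box_sum(ii, top, left, height, width):
    """Sum of the height x width box whose top-left pixel is (top, left), in four lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
print(box_sum(ii, 1, 1, 2, 2))  # 5 + 6 + 8 + 9 = 28
```

Building the table is a single pass over the image; after that, a 9×9 filter and a 99×99 filter cost the same number of lookups, which is why SURF can scale the filters up rather than shrink the image.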

Parameters used were (0, 300, 3, 4):

- extended = 0: basic, 64-element feature descriptors
- hessian threshold: 300
- number of octaves: 3
- number of layers within each octave: 4
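Because the parameters are passed as a bare positional tuple, it is easy to swap two of them by accident. Below is a minimal sketch that names each field in the same (extended, hessianThreshold, nOctaves, nOctaveLayers) order. `SurfParams` and `descriptor_length` are hypothetical helpers, not part of OpenCV.

```python
from collections import namedtuple

# Hypothetical named wrapper; field order matches the tuple passed to cv.ExtractSURF
SurfParams = namedtuple(
    "SurfParams", ["extended", "hessian_threshold", "n_octaves", "n_octave_layers"]
)


def descriptor_length(params):
    """Elements per SURF descriptor: 64 for basic, 128 for extended."""
    return 128 if params.extended else 64


params = SurfParams(extended=0, hessian_threshold=300, n_octaves=3, n_octave_layers=4)
print(tuple(params))              # (0, 300, 3, 4)
print(descriptor_length(params))  # 64
```

A namedtuple is a tuple subclass, so it may be accepted wherever the plain tuple is. I have not verified this against the legacy binding, so passing `tuple(params)` is the safer choice.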

Time taken for SURF extraction: 0.108652 seconds on a 249×306 image (MacBook Air, 2.13 GHz Core 2 Duo, 4 GB RAM).
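A single wall-clock measurement like this can be noisy. One way to get a steadier figure is to repeat the call and keep the fastest run. The sketch below does that with `timeit.default_timer` from the standard library. `best_of` is a hypothetical helper, and the stand-in workload is only a placeholder for the `cv.ExtractSURF(...)` call.

```python
import timeit


def best_of(func, repeats=5):
    """Call func() `repeats` times; return (last result, fastest run time in seconds)."""
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = timeit.default_timer()
        result = func()
        best = min(best, timeit.default_timer() - start)
    return result, best


# Placeholder workload; in the script this would be something like
# lambda: cv.ExtractSURF(cv_image1_grey, None, cv.CreateMemStorage(), (0, 300, 3, 4))
result, seconds = best_of(lambda: sum(range(100000)))
print("best of 5: %g seconds" % seconds)
```

Taking the minimum rather than the mean discards runs slowed by unrelated background activity. For reporting variability, keep all the timings instead.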

```python
# SURF Feature Descriptor
#
# Programmed by Jay Chakravarty
#
# Written against the legacy OpenCV `cv` Python module (OpenCV 1.x/2.x, Python 2).

import cv
from time import time

if __name__ == '__main__':
    # Load the two colour (BGR) test images
    cv_image1 = cv.LoadImageM("mvg1.jpg")
    cv_image2 = cv.LoadImageM("mvg2.jpg")

    # SURF needs 8-bit single-channel input, so create greyscale versions
    cv_image1_grey = cv.CreateImage((cv_image1.width, cv_image1.height), 8, 1)
    cv.Zero(cv_image1_grey)
    cv_image2_grey = cv.CreateImage((cv_image2.width, cv_image2.height), 8, 1)
    cv.Zero(cv_image2_grey)

    cv.CvtColor(cv_image1, cv_image1_grey, cv.CV_BGR2GRAY)
    cv.CvtColor(cv_image2, cv_image2_grey, cv.CV_BGR2GRAY)

    # SURF parameters, in order: (extended, hessianThreshold, nOctaves, nOctaveLayers)
    # extended: 0 means basic descriptors (64 elements each), 1 means extended descriptors (128 elements each)
    # hessianThreshold: only features with a hessian larger than this are extracted. A good default is
    #   ~300-500 (it can depend on the average local contrast and sharpness of the image). The user can
    #   further filter out features based on their hessian values and other characteristics.
    # nOctaves: the number of octaves used for extraction. With each successive octave the feature size
    #   doubles (3 by default)
    # nOctaveLayers: the number of layers within each octave (4 by default)
    surf_params = (0, 300, 3, 4)

    # SURF for image 1 (only the extraction itself is timed)
    tt = time()
    (keypoints1, descriptors1) = cv.ExtractSURF(cv_image1_grey, None, cv.CreateMemStorage(), surf_params)
    tt = time() - tt
    print("SURF time image 1 = %g seconds" % tt)
    #print("num of keypoints: %d" % len(keypoints1))

    # Each keypoint is ((x, y), laplacian, size, direction, hessian).
    # Draw a red circle (BGR (0, 0, 255)) around each keypoint in image 1. The radius is twice
    # the keypoint scale (size * 1.2 / 9); cv.Circle takes integer pixel coordinates.
    for ((x, y), laplacian, size, direction, hessian) in keypoints1:
        cv.Circle(cv_image1, (int(x), int(y)), int(size * 1.2 / 9. * 2), cv.Scalar(0, 0, 255), 1, 8, 0)
    cv.ShowImage("SURF_mvg1", cv_image1)

    # SURF for image 2, on image 2's greyscale version
    tt = time()
    (keypoints2, descriptors2) = cv.ExtractSURF(cv_image2_grey, None, cv.CreateMemStorage(), surf_params)
    tt = time() - tt
    print("SURF time image 2 = %g seconds" % tt)

    # Draw circles around keypoints in image 2
    for ((x, y), laplacian, size, direction, hessian) in keypoints2:
        cv.Circle(cv_image2, (int(x), int(y)), int(size * 1.2 / 9. * 2), cv.Scalar(0, 0, 255), 1, 8, 0)
    cv.ShowImage("SURF_mvg2", cv_image2)

    # Keep the windows open for up to 10 seconds (or until a key is pressed)
    cv.WaitKey(10000)
```
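The parameter comments note that keypoints can be filtered further by their hessian values, and the drawing loops turn each keypoint's size into a circle radius. Both steps can be expressed as plain functions over the keypoint tuples. The sketch below assumes, as the loops do, that each keypoint is ((x, y), laplacian, size, dir, hessian), and it further assumes that `descriptors` is a parallel list with one descriptor per keypoint. `filter_by_hessian` and `circle_for_keypoint` are hypothetical helpers, and the sample keypoints are made-up values for illustration only.

```python
def filter_by_hessian(keypoints, descriptors, min_hessian):
    """Keep keypoints (and their parallel descriptors) whose hessian is >= min_hessian."""
    kept = [(kp, d) for kp, d in zip(keypoints, descriptors) if kp[4] >= min_hessian]
    return [kp for kp, _ in kept], [d for _, d in kept]


def circle_for_keypoint(keypoint):
    """Return ((cx, cy), radius) in integer pixels for drawing a keypoint.

    A size-9 box filter corresponds to a Gaussian scale of 1.2, so the keypoint
    scale is size * 1.2 / 9; the radius is twice that scale, as in the loops above.
    The centre is rounded to the nearest pixel.
    """
    (x, y), _laplacian, size, _direction, _hessian = keypoint
    radius = int(size * 1.2 / 9. * 2)
    return (int(round(x)), int(round(y))), radius


# Made-up keypoints in the ((x, y), laplacian, size, dir, hessian) layout
keypoints = [((10.4, 20.6), 1, 9, 0.5, 250.0), ((30.0, 40.0), -1, 27, 1.0, 900.0)]
descriptors = [[0.0] * 64, [1.0] * 64]

strong_kps, strong_descs = filter_by_hessian(keypoints, descriptors, 500)
print(len(strong_kps))                    # 1
print(circle_for_keypoint(keypoints[0]))  # ((10, 21), 2)
```

Filtering keypoints and descriptors together keeps the two lists aligned, which matters if the descriptors are later compared between mvg1 and mvg2.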